Using Linux Mint As My Daily Driver: Three Years On. Well… An Anthology Of Sub-Articles About Me Using Linux For The Past Three Years (With Some Comparisons To Windows Here And There).

Well, for those who may already be familiar with my blog and what I wrote for it over the years, it’s that time of year again. And for those who aren’t, a quick briefing.

Three years ago, I made the move from Windows 10 to Linux Mint as my proper daily driver, after replacing the sluggish hard disk drive that was in my old red Acer laptop with a solid-state drive purchased off of Amazon. I have been a passionate Linux user ever since, and I do not regret it. I started using Mint proper on the 29th of July 2021, and in the years since, I have written blog posts published on 29th July every successive year, about me using Mint and how my usage of Mint has changed since the previous years. I consider my proper move to Linux a special life event of sorts, so much so that I think of each such post as an “anniversary” post. The first such post was quite long and detailed. The second one… not so much. It was written in a last-minute rush.

So, to avoid the same thing happening this year, I started writing this year’s “anniversary” post a few days early. I did have other things I wanted to write in between this post and my previous one, but life (and procrastination) got in the way of that. I was actually in the middle of writing a different post for this blog before this one, but that one’s taking more time than I thought it would. Still, I intend to finish it, so keep an eye out. For now, though, it’s Mint talking time, and this post will allow me to cover many things I didn’t get to cover last year or even the previous year. After a packed Monday 29th July 2024, even after planning several days ahead, I still managed to get this post published several minutes before midnight (that was lucky)! Also, since I started writing this post several days in advance, I have quite a lot to talk about now that, you know, I actually know what I want to say!

SIDENOTE: Whenever I had an article with multiple headings, such as this one, I used to write my headings in title case (with only one exception here). You may or may not have noticed it already, but this time, I decided to dispense with that and just capitalise the first letter of the first word and any proper nouns. This probably wasn’t important to mention at all, but there you go.

With the above sidenote out of the way, we’ll soon be getting to the post proper, and man! I thought the first year anniversary post was long at 15 minutes! But this one’s a 69-minute read (nice), which is over an hour!! I didn’t even intend for it to be that long! I just started writing, and then the post just gradually got bigger and bigger and bigger and bigger, and by the time the reading time initially surpassed the 30-minute mark, the whole post wasn’t even finished! I now consider this post a collection of sub-articles of sorts, such that each section here, except the closing section, could basically be its own article on this blog, so do try and read this post with that in mind if you’re feeling daunted by its sheer length.

And after all, as I said and/or implied in my previous post, when I’m in my element, I can write!

What has changed since my previous anniversary posts?

When I wrote my first anniversary post, I was doing my first degree at King’s College London. When I wrote my second anniversary post, I had just graduated. And I wrote that second anniversary post in a bad rush, so there’s barely anything worth quoting from there at all in retrospect. I do want to go over what I wrote for my first anniversary post before I get on to the rest of this year’s post, and I must admit that, after not reading it for so long, I’m equally surprised at how much I covered as I am at how much I left out, much of which I plan to cover this year.

Most of the “highlights” I covered when mainly talking about using Linux in my first anniversary post were to do with where I used Linux Mint in both my personal and “professional” life (about as “professional” as the life of a student can be, anyway), both of which have changed considerably since then, since (1) I’ve had more experience as a Linux desktop user and (2) I am now pursuing a different career path to what I expected at the time (more on that in the next section on my use of Linux as a PhD student).

Despite these changing circumstances, I do still stand by what I said for that “highlights” section:

- I still use Linux for my studies as a KCL student (well, duh).

- I still enjoy updating my computer using Mint’s Update Manager. If anything, I like it even more now that it handles Flatpak updates in the manager itself, whereas it previously only provided a way to update Flatpaks automatically upon logging in to the system. Now both apt packages and Flatpaks can be updated through the Update Manager if and when updates are available.

- I don’t have to restore my system with Timeshift often, but it’s really helpful on the occasions where I have to do so, and there have been a few such occasions since then. The last time I used it, it did freeze on me at the reboot stage, but a simple REISUB fixed that very quickly (I may make a tutorial article about REISUB at some point) and my system was restored with nothing else harmed. Even so, Timeshift has almost always been very reliable in my experience. And no, I still haven’t tried KDE Plasma again yet, though I still plan to do so at some point.

- I do still like Mint’s Software Manager, but actually, I’ve gotten more used to installing software through the terminal now! The more I’ve done it that way, the less I’ve relied on the Software Manager to install software from apt and Flatpak repositories, as I do enjoy seeing more visibly what I’m installing (the software itself and the required dependencies, as well as some suggested and/or recommended packages to install alongside said software) and what is actually happening during the installation processes of both apt packages and Flatpaks (more on those later). The Software Manager absolutely has its purpose on Mint, don’t get me wrong, and I’m glad it’s there for Linux beginners to get to grips with installing software on Linux, but I honestly prefer installing software via the terminal now, which is something I myself didn’t expect to happen as soon as it did, but I’m more than glad that is the case now!

- I still use Wine and Proton to run certain Windows games and software. I have a separate section in this article where I go a bit further into depth about it (but not too much).

The two things I missed about Windows were (1) the relative ease when it came to Pro Audio production and (2) there being no audio latency (or much less of it, at worst) with Adobe Flash Player, but those were minor things at the time. I haven’t checked on the audio latency bug with Adobe Flash Player on Linux with PulseAudio in a long time. I may or may not have tried PipeWire with the standalone Flash Player when I had PipeWire installed. I’m actually using PulseAudio right now because I had to change laptops after my red one got borked (you may know about this already if you’ve read my previous blog post, but there’s a section in this article about it as well), but once I upgrade to Linux Mint 22 on my uni laptop, PipeWire will be the default sound server, and my previous experiences with PipeWire have actually been quite decent. So, will PipeWire fix that bug, or will something else do so? I, or we (?), shall see, but even if it doesn’t, it’s a very minor thing. Barely anyone uses Flash these days, and for very good reason, so I don’t feel the need to break a sweat over it. I suppose I won’t be too disappointed if that bug doesn’t get fixed anytime soon. As for Pro Audio production, I have actually started dabbling with music production again on Linux, and it wasn’t as hard or risky as I initially expected. I have written a section in this article about my experiences with music production on Linux so far, and I plan to do more of it and also write more about it in the future.

I still don’t regret switching from Windows 10 to Linux Mint, for pretty much the same reasons I didn’t regret it back then. I also don’t feel an urgent need to switch distros anytime soon, again for the same reasons I didn’t plan on switching distros back then. I do still want to try out certain distros to get a good feel of each of them, but I haven’t done so yet. That, I’m sure, will come with time, experience and a bunch of VMs.

As for switching to Windows 11… yeah, I’m not doing that.

There, that was the last thing I needed to cover from that first year anniversary post. I’m pretty sure I’ve covered everything from there now. Obviously, since then, I’ve garnered more experience in using the Linux desktop in my daily life for a broader range of purposes, and I don’t see myself reverting to Windows or switching to macOS as my daily driver anytime soon. There’s definitely more I’d like to talk about here, though, so read on!

Use of Linux for my PhD research

Now that it’s been three years, and although I’m back at KCL, I’m effectively studying under a different system, so I use Linux in different ways for my research work. For what I’m doing, I’m able to use Linux pretty much seamlessly. I’ve not had to touch Wine or a VM running a different OS in my research (yet). Maybe that’ll change, maybe it won’t.

Most of the software I use for all intents and purposes is already free and open source, with some exceptions. I plan to write separate articles about the tools and other software I use routinely, both for my research and for other things.

This section’s solely about what I use for my PhD studies, though, so just for the sake of this section of this article, the most important programs I use for my research, and the ones I use most often for my research, are as follows:

- KBibTeX, for managing a personal BibTeX bibliography I maintain with all the external sources I take from in my research.

- Gittyup, for tracking said bibliography, and any other (non-)relevant Git repositories with an interactive GUI.

- Nemo, Cinnamon’s file manager (the equivalent of Windows’s File Explorer).

  - Yeah, yeah, I know, it’s a recondite and highly specific piece of software that very arguably should not be included at all in this list, but hey, this section’s short enough as it is, so I had to pad it somehow.

- xed, Linux Mint’s built-in text editor.

  - Again, a recondite and highly specific pick that arguably shouldn’t even be on here, but I use it so often for quick editing and viewing, when I don’t need code completion, that I felt the need to include it here anyway.

- VSCodium, a truly FOSS build of Visual Studio Code without Microsoft’s VSCode branding and any other fluff (e.g. telemetry).

  - I use it for more involved editing where code completion, in the form of what Microsoft call “IntelliSense”, becomes insanely helpful (both VSCodium and xed have syntax highlighting for a wide range of computer languages).

- Firefox, for scouring the web for papers and other resources to cite from.

  - Yes, I browse the web with it for other purposes too, but still.

- Since I already added a few recondite picks, those being Nemo and xed, to this list, I might as well go crazy and add the GNOME Terminal, Linux Mint Cinnamon’s default terminal, to the list as well. Quite often, I do actually end up working with the terminal as part of my research, though there are several reasons for this which I won’t go into in depth here.

- The PhD work I am doing involves code found in the AgileUML repository on GitHub, AgileUML being an editor (umlrsds.jar) and its attached toolset for working with UML diagrams and generating code from them. That same repository also contains the MathOCL editor (mathapp.jar), which uses some of the same libraries as AgileUML, hence why it’s ultimately in the same repository.

As I implied before, the above list is meant to potentially be its own blog article at some point, where I go more in depth with each of the programs and my opinions on them. All of them are free, open source and support Linux natively (so you don’t need Wine to get them working).

Yes, this section was quite short. I guess the main reason I wrote this section for this year’s anniversary post is that what I’m using now is a bit different from what I was using back when I wrote those earlier posts. There is a lot of overlap, since I’ve been doing Computer Science studies for my whole life, and what I studied at the time has some correlation with the research I carry out now as a PhD student. That’s the case with Gittyup and VSCodium (I used to use VSCode and GitAhead before that; Gittyup itself is a fork of GitAhead after GitAhead ceased development), and of course Firefox, Nemo and GNOME Terminal. However, I certainly didn’t use KBibTeX at the time because I didn’t need to, and I didn’t use xed nearly as often as I do now because I was almost always using VSCode or VSCodium for editing, and I just coped with the reasonably long start times for both. I also didn’t use AgileUML back then because I hadn’t even heard of it, but now I do. Nice.

Using Flatpaks

I remember when I first tried installing software on Linux Mint using Mint’s Software Manager. As you may already know, Mint is based on long-term support versions of Ubuntu, which is based on Debian (there’s also a version of Mint that directly bases itself off of Debian). Though Mint has some of its own packages, it also uses the apt repositories of the Ubuntu version it’s based off of. I’m using Mint 21.3, so it’s using a lot of packages from Ubuntu 22.04’s apt repositories, which are stable, rock-solid and reliable… and, a lot of the time, woefully out of date.

Linux Mint has built-in support for a universal package management system called Flatpak, one of three widely used “universal package” formats across various Linux distributions, the other two being Snap and AppImage. Flatpaks are hosted in Flatpak repositories in much the same way DEBs are typically hosted in apt repositories, and while it’s possible to set up your own Flatpak repository, Flathub is basically the de facto standard app repository for Flatpaks and the only one most Linux users will ever use, unless they’re looking to (1) host their own Flatpaks for their own projects and/or (2) get beta/testing/nightly versions of apps on or off Flathub (Flathub Beta is one such repository, and GNOME and KDE also have their own Flatpak repositories for nightly versions of their own software).

I was hesitant to use Flatpaks at first because, while the versions of most apps on Flathub (with some exceptions) are the latest stable versions, which I definitely prefer to downloading out-of-date versions of them from Ubuntu’s apt repositories, the sandboxing and large download sizes of Flatpaks threw me off, so I resorted to adding several apt repositories and PPAs (a type of apt repository mainly specific to Ubuntu) to my system.

Then, at some point, I got over it. I cannot explain why I got over it, partly because I don’t really remember anymore, and I forgot exactly when I got over it, but I got over it. I started installing Flatpaks for apps that were already available on Flathub, using Mint’s Software Manager and eventually the command line to do so. I almost always use the command line to install Flatpaks and even packages from apt repositories nowadays, though I still use the Update Manager to update them, especially since it actually supports Flatpaks now (it didn’t when I first used it).

Plus, I learnt why Flatpak downloads are so large at first. Each app is built against a runtime, with a corresponding SDK used to build it. The runtime will either be the Freedesktop runtime or a runtime derived from it, such as the ones for GNOME, KDE and elementary OS apps. When a Flatpak is installed, its runtime is installed alongside it if it isn’t already on the system (the SDK is only needed when building Flatpaks). This way, as more and more Flatpaks are installed, more of the runtimes they need are already present, which means later Flatpak downloads get smaller and smaller. It’s actually Flatpak’s way of handling dependency hell, which works because not only can you have different versions of the same runtime(s) installed if and when you need them, but also the apps themselves have their own dependencies that are either (1) removed after the app is built (during the build process) or (2) self-contained within the app’s Flatpak sandbox (apps poke through that sandbox only if and when they need to; it’s meant to add a layer of security to them). I thought that was genius when I first found this out, and I like that a shared runtime is only downloaded once and then reused by every app that needs it, because it’s a neat way of mitigating the large sizes (even if it still isn’t enough for some users).
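To make the shared-runtime idea above a bit more concrete, here’s a toy model in Python. It’s a simplified sketch, not how Flatpak is actually implemented: real Flatpak stores everything in OSTree, which also deduplicates identical files and can download deltas. The app IDs, runtime refs and sizes below are all made up for illustration. The only point it shows is that a runtime gets downloaded the first time an app needs it, and later apps using the same runtime only download themselves.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class App:
    """A hypothetical Flatpak app and the runtime it depends on."""
    app_id: str
    runtime: str       # illustrative runtime ref, e.g. "org.gnome.Platform//46"
    app_size_mb: int   # made-up size, purely for illustration


def plan_installs(apps, runtime_sizes):
    """Return (app_id, download_mb) for each install, in order.

    A runtime is only downloaded the first time an app that needs it is
    installed; every later app reusing that runtime only downloads itself.
    """
    installed_runtimes = set()
    plan = []
    for app in apps:
        download = app.app_size_mb
        if app.runtime not in installed_runtimes:
            download += runtime_sizes[app.runtime]
            installed_runtimes.add(app.runtime)
        plan.append((app.app_id, download))
    return plan


if __name__ == "__main__":
    sizes = {"org.gnome.Platform//46": 300, "org.kde.Platform//6.7": 350}
    apps = [
        App("com.example.First", "org.gnome.Platform//46", 20),
        App("com.example.Second", "org.gnome.Platform//46", 15),
        App("com.example.Third", "org.kde.Platform//6.7", 25),
    ]
    for app_id, mb in plan_installs(apps, sizes):
        print(f"{app_id}: {mb} MB")
```

With these made-up numbers, the second GNOME app only "downloads" its own 15 MB, because the runtime it shares with the first app is already there.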

I do still use native software packages for certain things. Linux Mint comes with a version of LibreOffice preinstalled, so I just use that alongside the OnlyOffice Flatpak, and even then I end up using OnlyOffice more often as it usually deals with Microsoft Office file formats better, though LibreOffice can come in handy in certain situations. Elsewhere, I still use Steam from the installed DEB package (more on Steam later), and I use apt repositories for other packages such as Wine and CMake. Firefox comes preinstalled with Mint as well. I find that Flatpaks are more useful for GUI applications that have multiple dependencies, and that’s their biggest draw for me. For instance, some apps require GTK2, some require GTK3, some even require GTK4 now, and then there are apps for Qt4, Qt5 and now Qt6, as well as other libraries, so I find that Flatpak’s management of dependencies helps me have these multiple apps installed and avoid dependency hell as much as possible.

I also just like having the most recent stable versions of applications that usually aren’t essential to the running of the system itself. As an ex-Windows user, I was used to being able to download the latest stable version of, say, VLC Media Player, Python or even some other programs such as emulators. I was also used to downloading almost all my external software from the internet instead of using a package manager of sorts, which is why I rarely ever touched the Microsoft Store, as much as I liked TapTiles at the time (it’s now on Steam, but you have to pay for it). Three years of consistently daily driving a Linux distribution full-time haven’t really got me out of those two ways of thinking. Generally I’m fine with still doing the latter on Linux as long as I can trust the package source (a sign that it’s official always helps when downloading installers from anywhere, really), but having an easier way to do the former on Linux, and resorting to the latter less and less, is always much appreciated. Now, obviously, I’m not going to mess with the system software that my Linux Mint system requires to be at a set version in order to function properly, which is why I’m still stuck on Python 3.10 even though Python 3.12 is out. I’ve made peace with the fact that with things like Python I’m going to be a few versions behind, but that’s a separate blog article in and of itself, and I don’t want to go into detail about my opinions on that here. For apps that can safely be updated to the latest stable version, I’m more than happy to be able to do so easily, and Flathub’s Flatpaks are just one way of doing that on Mint.

For the record, I do not think every app should be a Flatpak, as much as I enjoy using them and want to see how far Flatpak’s usability can be pushed, if only for the sheer novelty of such a thing. There are reasons why most distros stick to native packages for a robust, consistent, fast and easily accessible base. I think most GUI-based apps could be built into a Flatpak somehow, but CLI programs, while they can be packaged as Flatpaks, are better used via other means, especially important system software that isn’t GUI-based. This is mainly because running a Flatpak via the terminal is done thusly: flatpak run com.example.myapp. You can set up a shell alias in ~/.bashrc or ~/.zshrc, depending on whether your shell is Bash or Zsh, but you’ll have to do that yourself for every individual Flatpak you want to run from the command line this way. For a terminal-based program, in my opinion, you’re better off installing it via a DEB, RPM or other native package, or even an installer script if one is provided officially by the app’s developers. That way, you can just run it by typing myapp into the terminal (or sudo myapp if that’s required), provided it’s in a directory listed in the system’s PATH environment variable (hey, there’s an article idea).
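If you do want to go the alias route for a Flatpak mentioned above, here’s a rough Python sketch that prints an alias line you could paste into ~/.bashrc or ~/.zshrc. It assumes a naming convention of my own choosing (the last part of the app ID, lowercased, so com.example.myapp becomes myapp), and com.example.myapp itself is just a placeholder ID. It also uses Python’s shutil.which to check whether that name already resolves to a program on your PATH, so you don’t accidentally shadow a real command.

```python
import shutil


def alias_name(app_id: str) -> str:
    """Derive a short command name from a Flatpak app ID.

    Assumption: use the last dot-separated component, lowercased,
    e.g. "com.example.myapp" -> "myapp". Pick your own name if it clashes.
    """
    return app_id.rsplit(".", 1)[-1].lower()


def make_alias(app_id: str) -> str:
    """Return a Bash/Zsh alias line that wraps `flatpak run <app_id>`."""
    return f"alias {alias_name(app_id)}='flatpak run {app_id}'"


def on_path(command: str) -> bool:
    """True if `command` resolves to an executable somewhere on the PATH."""
    return shutil.which(command) is not None


if __name__ == "__main__":
    for app_id in ["com.example.myapp"]:  # placeholder app ID
        name = alias_name(app_id)
        if on_path(name):
            print(f"# skipping {name}: already a command on your PATH")
        else:
            print(make_alias(app_id))
```

Once the printed line is in your shell config and you open a new terminal, typing myapp some-file expands to flatpak run com.example.myapp some-file. Bear in mind that aliases only apply in interactive shells, which is part of why a native package on the PATH is still the nicer option for CLI tools.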

One more thing: If you’re using any Flatpaks at all, one Flatpak I absolutely recommend installing is Flatseal. This lists out every Flatpak you have installed and, more importantly, the permissions each Flatpak has, and not only can you see what each app can access, but you can actually edit the permissions accordingly using Flatseal’s UI to change what it can and cannot access in your system and also in Flatpak’s sandbox, without having to pass in command-line arguments to desktop files or launcher scripts within the installed Flatpak’s files (which also makes Flatseal a safer and more robust way of changing the permissions a Flatpak has every time it’s run). This is important for apps that require access to specific locations in your drive, so that you can enable that accordingly. There’s also Warehouse, which is a more general Flatpak manager, and Flatsweep, which removes residual files from uninstalled Flatpaks, both of which I use every so often. There’s also an app on Flathub called jdFlatpakSnapshot for taking snapshots of installed Flatpaks in the event that one gets borked somehow, which as of now I haven’t installed and/or used yet, but I’m glad it’s on Flathub, and I thank JakobDev for adding many apps of his creation to Flathub.

Maintaining and building Flatpaks

Getting used to how Flatpaks worked also took me on a separate path.

See, Flathub’s Flatpak build manifests are all made publicly available on GitHub for all to see. When you click on a Flatpak’s page on the Flathub website, then scroll down and click on “Links”, there’ll be a link to the Flatpak’s manifest, appropriately labelled “Manifest” with an icon beside it and a URL to the Flatpak manifest underneath. This provides a level of transparency I’ve always appreciated from Flathub, because it means one can easily see not only how a Flatpak is typically built but also what is actually being done to a Flatpak and when (since they’re all basically Git repositories). And, when one gains the confidence to do so, they can eventually start making changes to these Flatpaks themselves by forking a Flatpak’s repository, committing and pushing the changes they need to their fork, then opening a pull request against the original repository (I may make another article on Git at some point to go over what goes on with using it). There are very few exceptions to the rule, the only one sticking out like a sore thumb being the OBS Studio Flatpak, but since its manifest is in OBS Studio’s actual code repository, even that one is publicly viewable and editable. I haven’t found any Flatpak on Flathub that isn’t available for the public to see and contribute to, and I’m pretty sure there is no such Flatpak (all signs point to me being right on this one). I’ve not seen this level of transparency with the Snap Store, which is one of several problems I have with both the Snap Store and Snaps in general, but that’s a story for another time, and it might be a long one.

I had opened two issues on Flathub’s Flatpak repositories before, in 2022, but I hadn’t made any real contributions to Flathub yet. That all changed in February 2023, when I opened an issue on the Godot Flatpak’s repository. At the time, Godot 4, the next major version of Godot, which brought with it several breaking changes for Godot 3 projects, had yet to reach a stable release, but that release was fast approaching (there were one or two release candidates during this time), and I was wondering how the Flatpak maintainers would go about handling the needs of both current Godot 3 users and incoming Godot 4 users (Godot 4 had a growing userbase in its own right while it was still in development). I was wondering if it would be feasible to maintain two different Flatpaks at once… so I decided to ask them just that.

Long story short: I helped update the Godot Flatpak to 4.0, then later on I helped to get a Godot 3 Flatpak up on Flathub. And a Flatpak for Godot 4 with C# support from a previously opened PR by CharlieQLE. And a Flatpak for Godot 3 with C# support, again adapting CharlieQLE’s aforementioned PR to work with Godot 3, which took a bit more work than I expected but was still a success nonetheless.

After I first updated the Godot Flatpak to 4.0, my first real contribution to an open-source project of any sort, I got inspired, to say the least. I got to updating the Processing Flatpak to the latest version when I found out it was out of date, and since then I’ve been given write permissions to that Flatpak’s repository, which I used to provide support to aarch64 systems using the arm64 binaries that the Processing Foundation provides for using the Processing IDE on ARM systems (previously the Flatpak only supported x86_64 systems, which is most systems these days but, hey, gotta look out for the little guy when you can).

And then I went crazy. As well as the aforementioned Godot Flatpaks, I worked on updating basically any Flatpak I found to be out-of-date or that didn’t have the required metadata. My GitHub profile’s public repositories are almost all forks of Flathub Flatpaks now, because I’ve been working on updating several of them since I started (and some haven’t been as successful as I hoped). I also have Flatpak Builder and Flatpak External Data Checker installed on my uni laptop where I do all my work (more on that later), so when I’m doing a more involved update to a Flatpak, or even when I’m building a new Flatpak and need to see if it works before I can try adding it to Flathub (by creating a new PR in the flathub repository), I can build a test build locally, then install it system-wide and run it to see if it works as it should and/or if something needs fixing.
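For anyone curious what those build manifests look like, here’s a rough sketch of a minimal one, written as a Python dict and printed as JSON (flatpak-builder accepts JSON or YAML manifests). Everything app-specific here is hypothetical: com.example.myapp, the download URLs and the all-zero sha256 values are placeholders, and the runtime version is just an example. It also shows, in simplified form, the kind of per-architecture setup I mentioned for Processing: two prebuilt archives, each restricted to one architecture with only-arches, so an aarch64 build picks up the arm64 download and an x86_64 build picks up the other.

```python
import json

# Hypothetical app: the ID, URLs and checksums below are placeholders,
# not a real Flathub app. Swap in real values before building.
manifest = {
    "id": "com.example.myapp",
    "runtime": "org.freedesktop.Platform",
    "runtime-version": "23.08",  # example version only
    "sdk": "org.freedesktop.Sdk",
    "command": "myapp",
    "finish-args": ["--share=ipc", "--socket=x11"],
    "modules": [
        {
            "name": "myapp",
            "buildsystem": "simple",
            # Copy the prebuilt binary into the app's /app prefix.
            "build-commands": ["install -Dm755 myapp /app/bin/myapp"],
            "sources": [
                {
                    "type": "archive",
                    "url": "https://example.com/myapp-x86_64.tar.gz",
                    "sha256": "0" * 64,  # placeholder checksum
                    "only-arches": ["x86_64"],
                },
                {
                    "type": "archive",
                    "url": "https://example.com/myapp-aarch64.tar.gz",
                    "sha256": "0" * 64,  # placeholder checksum
                    "only-arches": ["aarch64"],
                },
            ],
        }
    ],
}

if __name__ == "__main__":
    # Redirect this output to e.g. com.example.myapp.json.
    print(json.dumps(manifest, indent=2))
```

With real values filled in, a local test build would be something along the lines of flatpak-builder --user --install --force-clean build-dir com.example.myapp.json, followed by flatpak run com.example.myapp to check that it actually works.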

I’d say the one huge mistake I made in updating a Flatpak was updating the TeXStudio Flatpak’s runtime to 6.6 (it uses the KDE Flatpak runtime, which bases its version numbers on the corresponding versions of Qt rather than KDE’s own version numbering system). This meant that there wasn’t a corresponding version of the TeX Live Flatpak that it could reliably use, since each KDE runtime is built on a specific version of the Freedesktop runtime, and the TeX Live Flatpak has to correspond to that same Freedesktop version. The runtime update therefore had to be reversed until TeXStudio could use a version of the TeX Live Flatpak that actually corresponded to its runtime (when I tested initially, it somehow detected the 22.08 TeX Live Flatpak, but didn’t install it when the test build got updated, which was weird). That was a setback, and that was on me, but in my opinion you should never let something like that get you down too much. I was back to making contributions to Flathub soon enough. Lesson learned: test more thoroughly than you already do. It’s an extremely important lesson that some apparently still need to learn before even considering pushing any untested code to production.
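The rule behind that TeXStudio mix-up can be sketched as a small compatibility check. This is a simplified illustration, not how Flatpak itself resolves extensions, and the rule it encodes is a working assumption: an extension like the TeX Live Flatpak is only usable if it was built for the same Freedesktop version that the app’s KDE runtime is based on. The two mappings in the table are examples only (the 5.15-22.08 runtime name spells out its own base, and 6.5 sits on 23.08); check the actual runtime metadata before relying on any of them.

```python
# Example base versions only; check the real runtime metadata
# before relying on these. Not an exhaustive table.
KDE_RUNTIME_BASE = {
    "5.15-22.08": "22.08",
    "6.5": "23.08",
}


def extension_matches(kde_runtime: str, extension_branch: str) -> bool:
    """True if an extension built for `extension_branch` matches the
    Freedesktop version that `kde_runtime` is based on."""
    base = KDE_RUNTIME_BASE.get(kde_runtime)
    if base is None:
        raise KeyError(f"unknown KDE runtime version: {kde_runtime}")
    return base == extension_branch


if __name__ == "__main__":
    for runtime in KDE_RUNTIME_BASE:
        print(runtime, "+ TeX Live 22.08:", extension_matches(runtime, "22.08"))
```

The point is simply that bumping the runtime also silently changes which extension versions the app can use, which is exactly what a more thorough test build would have caught.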

Among the many, many contributions I made to Flatpak repositories on Flathub are:

- Worked on fixing some AppData problems with SuperTux on Flathub, which meant that it incorrectly denoted the game as being proprietary when it’s really not (see the linked issue I took part in resolving). I also got the updated SPDX Licence Expression submitted to the upstream SuperTux repository!

- Added aarch64 support to the Greenfoot Flatpak when updating it to the latest version at the time (3.8.2).

- Made some updates to the BlueJ Flatpak (which already had, and still has, aarch64 support).

- Made some updates to the Strawberry Audio Player Flatpak.

- Made various updates to the Godot, Godot 3, Godot (C#/.NET), Godot 3 (C#/.NET/Mono) and Processing Flatpaks.

- Added the OpenJDK 21 LTS Flatpak to Flathub.

- Updated the runtime and version(s) of the Inky Flatpak.

- Updated the runtime of the Inform 7 IDE Flatpak to GNOME 46, which involved a bit more work than I expected and also resulted in me contributing to the upstream repository!

And the list goes on… and on… and on… and on.

Making more and more open-source contributions like this has given me more confidence to do more things in general. I mean, if I can do all that, what else can I do?

In working on maintaining Flatpaks hosted on Flathub, I also learnt to work better with others through GitHub, which is important in helping build up my teamwork skills in tech teams in general (I did have to do group projects in uni, after all), but I’d go as far as to say it’s an essential skill when working in open-source, not just through GitHub but through any other public source code repository hosting service such as GitLab, SourceForge, Codeberg, GNU Savannah and any repositories self-hosted on the internet by the program developers themselves. I realise this may not be the most controversial take out there (as well as most of this article, really), but I do feel the need to reiterate this not just for you, dear reader, but for myself as well. If I fail spectacularly to follow my own advice, and it’s made public, I shall be more than prepared to eat my own words. For now, though, I’m doing quite well with working on maintaining Flathub’s Flatpaks with others on GitHub. Good stuff 👍

Now, I still have more to do on that end (the end of open-source contributions, that is). After all, they’re just build manifests for Flatpaks. I’m probably not going to get a job out of my knowledge of how JSON, YAML, .desktop and AppData metainfo files are written and laid out. I want to make greater code contributions in programming languages such as Python and Java, which I know quite well, and even languages such as JavaScript and C#, which I don’t know as well. I’m pretty sure I’ll get to that in time.

For now, though, I’m more than happy and proud to add “somewhat frequent Flathub and Flatpak contributor” to my CV, both informally and eventually formally when I get to updating my actual CV. I never contributed back to any open-source project while I still daily drove Windows, so as a Linux user and a user of Flatpaks from Flathub, I’m proud of what I was able to achieve for myself and my own skillset as a contributor to Flathub, and long may my contributions to Flatpaks and Flathub continue!

Having to change laptops after the fabled “red laptop” got borked

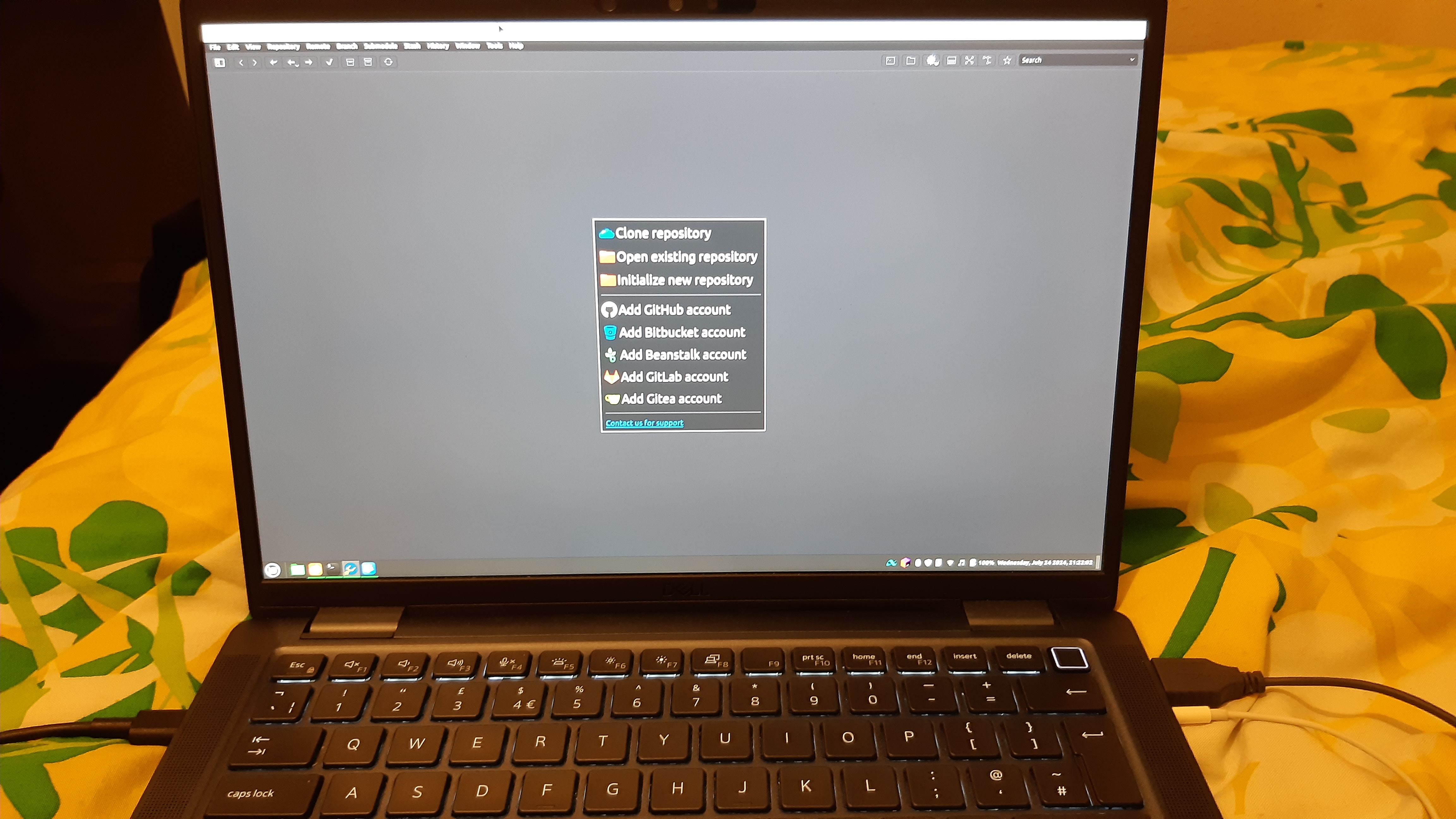

This is me using Linux Mint in 2021:

And this is me using Linux Mint in 2024:

Notice anything different about the laptop I’m using?

Yeah. It’s no longer red.

It’s also not the same laptop.

I went over why it’s not the same laptop in my previous blog post, but long story short: the red one got borked.

I’m now using my uni laptop for basically everything, but in the intervening weeks between the red one getting borked and me getting this one, I had to borrow the family laptop, which at the time had Windows 11 installed. I got so frustrated after a while that eventually I set up a Mint partition on there, so I can’t really say Windows 11 has been a daily driver for me during that time. Now that I no longer need the partition and dual boot on that laptop, I had considered getting that partition removed at some point so that my family would no longer have to go through GRUB to use the Windows partition. Then my Dad started trying out said Mint partition. I had already set up an administrator account in his name so that he could start using Mint someday, which I really hoped for but didn’t think would happen, but now it has, and I very much look forward to seeing him make further progress with using a desktop Linux distribution. He already uses a Linux server in his workplace, but server/enterprise Linux and desktop Linux are two different things, so I’m excited to see the progress he’ll hopefully make in using Linux Mint with more time and experience. Someday I want to get the whole family on board with using Linux as their daily driver instead of Windows. With them, I’ll most likely have to start with Mint. Ah, one can only dream…

…Anyway, back to the topic at hand!

My uni laptop is basically my new “red laptop”, except it’s not red, it’s grey. I went over how I procured this thing in my previous post, but for those who haven’t read it, the KCL Informatics department “gave” (the inverted commas are an important indication of sarcasm in this case) me this laptop to borrow while I am doing my PhD studies, so that I can, well, do my PhD studies with it. Which I do do, you know, because I don’t really have anything else to do my PhD studies on (that at-present-dual-booting family laptop does not count as it is not mine), but I also use it for other things such as writing this blog, writing code, writing my Flathub contributions and pushing them to forks of Flatpaks on GitHub, browsing the web, playing games and just living my life, really. The laptop initially came with a restricted version of Windows 10 preinstalled (as if Windows 10 weren’t restrictive enough already), which I didn’t want, so they let me change the BIOS password and then do a fresh clean install of Linux Mint 21.3, which is what I’m using with “my” uni laptop right now to write and publish this blog article. Mint on that laptop worked quite well by itself after installation. I just had to install some drivers afterwards to make sure all of the laptop’s hardware was properly supported, and that’s all done now, so it’s all good. Linux Mint’s Driver Manager helps a lot with checking for needed drivers and installing them accordingly.

One more thing I forgot to mention regarding my uni laptop, both here (until this paragraph) and in my previous blog post: I had to use the EDGE ISO to perform a fresh install of Mint 21.3 on it. When I used the normal ISO, the wifi and/or sound wasn’t working (I forget which), so using the EDGE ISO with a newer version of the Linux kernel fixed those issues, and that way I could safely install Mint on my uni laptop. Even to this day, it’s still using that newer version of the kernel on my uni laptop when running Mint, and I don’t plan on changing the kernel in use anytime soon lest something starts to break.

I’m going to miss my red Acer Aspire laptop. It was bought in 2016 as a family laptop, then in late 2018 I started using it for my own things and it eventually became my laptop, then I replaced the dead slow hard drive (it really was on its last legs by then; I had to get a different laptop just to complete some coursework on time) in 2021, then it died in 2023. Seven years for a laptop sadly seems all too rare in this woefully wasteful world of technology that’s constantly increasing in specification(s) and processing power, and for what it was it worked well and did the job. I could live with 8GB of RAM and 1TB of HDD (later SSD) storage and integrated graphics and sound, regardless of how much I somehow managed to push that 8GB of RAM. And, I’ll tell you what, that laptop, and both of the drives used in its lifespan, house some really fond memories of me and my family using it over the 7 years it functioned for. That’s something that doesn’t get taken away, even if you can’t always feel a memory (d’you know what I mean?).

But since it doesn’t work anymore, I needed a replacement somehow so that, you know, I could actually continue doing my work. That’s where KCL’s Informatics department unexpectedly stepped in, and thank God they did! They let me borrow a Dell Latitude laptop for the entire duration of my course, and it has, well, way more than I need, really. I mean, it still comes with 1TB of NVMe SSD storage and integrated graphics and sound, which is more than enough for me, but to go from 8GB to 32GB is definitely a jump for someone like me who isn’t used to readily having access to that much RAM. The laptop’s also got 10 physical cores and 12 logical ones, definitely an upgrade from the 4 cores my old red laptop’s CPU had!

Honestly, I’m just glad to have a working laptop now. Truth be told, I wouldn’t’ve minded a restored second hand laptop for a more personal long-term replacement, but for what I’m doing with my life now, the uni laptop will serve me very well. And hey, I’m using it to write this blog article! How fun!

Updating between major versions of Linux Mint using mintupgrade

At the time this post goes live, Linux Mint 22, based on Ubuntu 24.04 LTS, has been released. This is a major upgrade (we’re rounding upwards, people!!), which requires a different upgrade process from minor upgrades between point releases. As of the time of this article being published, I am still using Linux Mint 21.3, based on Ubuntu 22.04 LTS. Not much is holding me back yet, except for some PPAs I’m using which haven’t had DEB packages built for Ubuntu 24.04 yet. I’ll be contacting the maintainers of those PPAs soon to see if they can update their PPAs to host packages for 24.04.

I remember when I first upgraded from Linux Mint 20.3 to Linux Mint 21. I waited a few months to actually carry out the upgrade. By then, the Mint team had released a new, graphical version of its mintupgrade tool to make upgrading between major versions of Mint easier, as that involves a radical change in package base (at the time, from Ubuntu 20.04 to Ubuntu 22.04). Previously this process would’ve been more complex and would’ve required a ton of expert knowledge, so if I were upgrading, for example, from 19.3 to 20, I would’ve had to either follow this tutorial or carry out a completely fresh install. Having a semi-automated tool to handle the different package bases is very, very helpful not only to beginners but also to intermediates and experts, and basically anyone using Mint for its reliability and simplicity. Since that version was introduced with the upgrade to Mint 21, the Mint development team have already released a new version of mintupgrade for upgrading to Mint 22, as well as instructions for how to carry out said upgrade with it. I’ll be actioning the upgrade when I’m ready to do so.

When I carried out the upgrade to Mint 21, it definitely took much longer than upgrades between minor point releases. I don’t remember exactly how long it took, and I didn’t time it either, but it definitely took several hours total (the Linux Mint User Guide did say it would take “several hours”), between the configuration using the tool and the actual automated upgrade. While the automated upgrade after the configuration steps was mostly hassle-free, the crucial change in package base meant that I had to abandon at least one external apt repository that had a version for Ubuntu 20.04 but not for Ubuntu 22.04, namely the Mono Ubuntu builds repository (and as of now, it still doesn’t have a 22.04 version), though nowadays, if I absolutely need Mono (I usually use the cross-platform .NET implementation Microsoft provides these days), I’d prefer to use the Mono Flatpak directly and/or build it from source for general use (more on building software from source in the next section). I definitely wouldn’t mind trying Mono out extensively, but that’s a separate project in and of itself, and it may be worth a separate blog article someday.

I wish I remembered the details about exactly how mintupgrade worked when I used it, and also how it went, but sadly the memories of that are either hazy or they’ve completely vanished. That’s also partly why this section is considerably shorter than most of the other sections, as I have less to write about here because of it. When I eventually upgrade to Mint 22, I want to write more about mintupgrade and the upgrade process in a separate article. Hopefully it will allow me to cover some of the things about mintupgrade that I wanted to cover both this year and last year. Now, it’s down to (1) waiting for the upgrade instructions to be uploaded to the Linux Mint blog and (2) contacting some PPA maintainers on Launchpad to get some packages built for Ubuntu 24.04.

Learning to appreciate building (some) software from source

My earlier experiences with building and maintaining Flatpaks eventually exposed me to build systems in practical use.

Flatpak innately supports 6 build systems (this link should take you to the “flatpak-manifest” section; from there, scroll down to “Module” and then “buildsystem (string)” to see them all listed):

- Standard GNU autotools (the GNU build system: your autogen.sh and/or your ./configure, make and make install, where ./configure runs a shell script called configure without a file extension), which is the default.

- CMake (Flatpak Builder then automatically runs make and make install afterwards).

- CMake augmented with Ninja (CMake is run first to generate Ninja build files, then ninja and ninja install are run instead of make and make install).

- Meson (a more modern build system written in Python, which also uses Ninja to do the actual building).

- QMake (the Qt project’s build tool, which generates Makefiles and can definitely be used outside of Qt).

- A “simple” build system that is basically a sandbox to run shell commands in (e.g. Godot uses SCons for its build system, so Flatpak Builder first installs SCons, then uses the simple build system configuration to run SCons within the sandboxed build environment).
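To make that list a little more concrete, here’s a rough Python model of the command sequence each buildsystem value leads to. Treat it as a simplified sketch under my own assumptions rather than what Flatpak Builder literally runs: the real tool adds things like config-opts and make-args from the manifest, may build in a separate directory, and its exact flags can change between versions. The steps_for helper, the _build directory name and the SCons-based example module are all made up for illustration; only the buildsystem names and the /app install prefix come from Flatpak itself.

```python
# Rough model of the command sequence flatpak-builder runs for each
# "buildsystem" value in a manifest module. Simplified: real builds add
# config-opts, make-args, build directories and more. PREFIX is /app because
# Flatpak apps install their own files there; "_build" is an arbitrary name.

PREFIX = "/app"

BUILD_STEPS = {
    "autotools": [["./configure", f"--prefix={PREFIX}"], ["make"], ["make", "install"]],
    "cmake": [["cmake", f"-DCMAKE_INSTALL_PREFIX={PREFIX}", "."], ["make"], ["make", "install"]],
    "cmake-ninja": [
        ["cmake", "-G", "Ninja", f"-DCMAKE_INSTALL_PREFIX={PREFIX}", "."],
        ["ninja"],
        ["ninja", "install"],
    ],
    "meson": [
        ["meson", "setup", f"--prefix={PREFIX}", "_build"],
        ["ninja", "-C", "_build"],
        ["ninja", "-C", "_build", "install"],
    ],
    "qmake": [["qmake"], ["make"], ["make", "install"]],
}


def steps_for(module: dict) -> list[list[str]]:
    """Return an approximate command sequence for one manifest module."""
    system = module.get("buildsystem", "autotools")  # autotools is the default
    if system == "simple":
        # "simple" runs the module's own build-commands as shell lines, in order
        return [["sh", "-c", cmd] for cmd in module.get("build-commands", [])]
    return BUILD_STEPS[system]


if __name__ == "__main__":
    # A hypothetical SCons-based module, similar in spirit to Godot's
    scons_module = {"name": "my-game", "buildsystem": "simple", "build-commands": ["scons -j4"]}
    for module in [{"name": "plain"}, {"name": "n", "buildsystem": "cmake-ninja"}, scons_module]:
        print(module["name"], steps_for(module))
```

The thing worth noticing is that every buildsystem except “simple” is just a fixed recipe, which is why a module using one of them often only needs to name the build system and point at its sources.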

Admittedly I haven’t come across any modules using QMake yet, but with pretty much every other build system listed (yes, even the “simple” one), I’ve gained valuable experience in using them within the Flatpak sandbox. Before I worked with Flatpaks, I never made the effort to look into how build automation worked, and I was put off from building software from source because:

- I didn’t want to have to go through dependency hell just to build some programs that require different versions of the same library.

- I thought building software from source would be too complex (I think a lot of people have this perception).

- I felt I just wasn’t ready to learn how to build a functioning executable yet.

I knew how to use Make (make), specifically GNU Make with GCC, from a C++ programming module I studied back in the second year of my first degree, but I did very little C/C++ programming since then (I really should start again), so I haven’t really worked with GNU Make much throughout my career besides then.

And most of the software I used at the time was already available in binary form for use on Mint:

- On Flathub.

- In Mint’s and/or Ubuntu’s package repositories in a version that was recent enough for my tastes.

- In a different apt repository containing the latest stable versions.

- In a PPA, which is basically a different kind of apt repository.

- As a separate download via a DEB file, an installer script or a tarball/zip folder containing the executable files.

- As an AppImage, but I rarely use those, and, for that matter, I’ve never once used a Snap, especially since Mint has disabled Snaps by default (there’s a documented way to re-enable them if you want to do so).

So here’s why I recently started building some software from source:

- To finally start learning, in hopefully a bit more depth, how some of the software I use is actually built.

- To practice some of my existing knowledge of build systems, which I have acquired from building Flatpaks using Flatpak Builder and observing the terminal output.

- To get a clearer view of building software and hopefully clear out some preconceptions I had of software build processes.

- To see how far I can go with this without ending up in dependency hell.

- To start building software I may end up wanting to use that isn’t already available in binary form on current versions of Mint, Ubuntu, Debian or even Linux in general.

- To start building software I may end up wanting to use that already is available in binary form on current versions of Mint and other Linux distributions, just to see if I can build it myself without running into a barrage of build errors.

I do keep coming across numerous build errors when building software from source, both outside of and even with Flatpak Builder, and I expect more of that to happen to me, especially as I try to build older applications from source. Yet, even despite all that, I’m honestly enjoying it. As much as I don’t necessarily like having to deal with build errors and/or missing and/or clashing dependencies - and I’m sure barely anyone out there does - I’ve learnt to persevere with fixing all of those errors and getting closer and closer to compiled executables every time I run into them. Sometimes, if it gets to me too much, I take some time off to stop stressing over it and then think about how I’m going to overcome this build problem, then I go back into it and at some point it should get fixed. Nowadays, I go into building software from source with the following mindset, especially when I’m facing build errors: “Every problem has a solution.” Well, almost every problem. While there are a small number of problems that are impossible to solve, most of them, and certainly most of the problems I come across when building software, are fixable through, for example, installing a missing dependency, or patching a line of code, or leaving out certain modules in the build that I don’t necessarily need, among other fixes.

And when I do end up with a successful build from source, it really is immensely gratifying. It might just be me being relatively new and not completely accustomed to building software from source (yet), but every time a build from source is a success, especially for a particularly complex application and/or one that requires a considerable number of dependencies, I feel elated, like I’ve achieved something, you know? It’s especially worth it after facing several build problems and having to fix them just to get there, as much as I really do not want to do that! Just like with building Flatpaks, every time I build something successfully from source, I think “If I can do that, what else can I do?”

Here’s another thing that made me like Make specifically, certainly more than I liked it before, and I only found out about it after working with it in Flatpak Builder: the fact that you can run basically any build command for any build tool and/or compiler for any programming language inside a Makefile. I used to think Makefiles were strictly reserved for C and C++, and that I couldn’t use them with other languages and/or build tools, and I imagine I wasn’t alone in thinking this way about Make, but after seeing projects using the standard GNU build system include build commands in their Makefiles for compilers and other build tools that aren’t gcc or g++, I started to realise just how versatile Makefiles and the GNU build system could actually be. You can call anything from a C compiler to a Ruby compiler to a Pascal compiler to even javac, so you can compile .java files into .class files and then build them into a .jar file in a Makefile! You can even call, for example, dotnet build in a Makefile. I didn’t even think that was somehow possible until now, and to think it’s that easy! How cool is that?
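Here’s a small illustration of that idea, written as Python that generates (and prints) a tiny Makefile whose recipes call javac and jar instead of gcc. The file layout (src/ for sources, build/ for classes), the Hello main class and hello.jar are all hypothetical. It also assumes every class sits in the default package, so that the src/%.java to build/%.class pattern rule lines up.

```python
# Hypothetical example: build a tiny Makefile whose recipes call javac and jar
# rather than gcc. Assumes sources live in src/ in the default (unnamed)
# package, and that the main class is called Hello; both are made up.

def java_makefile(main_class: str = "Hello", jar_name: str = "hello.jar") -> str:
    tab = "\t"  # Make requires each recipe line to start with a literal tab
    lines = [
        "JAVAC ?= javac",
        "JAR ?= jar",
        "SOURCES := $(wildcard src/*.java)",
        "CLASSES := $(SOURCES:src/%.java=build/%.class)",
        "",
        ".PHONY: all clean",
        f"all: {jar_name}",
        "",
        "# Compile each src/X.java into build/X.class",
        "build/%.class: src/%.java",
        f"{tab}mkdir -p build",
        f"{tab}$(JAVAC) -d build -sourcepath src $<",
        "",
        "# Bundle the classes into a runnable jar (e = entry point class)",
        f"{jar_name}: $(CLASSES)",
        f"{tab}$(JAR) cfe $@ {main_class} -C build .",
        "",
        "clean:",
        f"{tab}rm -rf build {jar_name}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Print the Makefile; redirect the output into a file called Makefile to use it
    print(java_makefile(), end="")
```

Redirecting the output into a file called Makefile next to a src/Hello.java file and running make should produce hello.jar, and make clean removes the build output again. A dotnet build line would slot into a recipe in exactly the same way, since Make just hands each recipe line to the shell.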

If you’re building software from source, and you want to build a DEB or RPM, there are several ways to achieve this, but the only method I’ve tried so far, and one I would recommend, at least for doing this locally, is using a program called checkinstall, which you should be able to install from your distro’s repositories using its package manager (in my case, I’m using Mint, so I ran sudo apt install checkinstall). I’m sure you can use build systems other than autotools with checkinstall, but checkinstall works especially well with the GNU build system. After running ./configure and make, you can run it to create a DEB, RPM or even a Slackware package thusly: sudo checkinstall --install=no (you can optionally add the -D flag to build a DEB specifically, or -R or -S for an RPM or Slackware package respectively). Running sudo checkinstall by itself will actually install the package automatically for you, which may be what you want (and you can always uninstall it afterwards by running, e.g., sudo dpkg -r <package-name> on a DEB-based distro), but --install=no skips the installation step after creating the package itself. With a package created like this, you can install and uninstall it cleanly using dpkg or another apt-based software manager and/or DEB installer frontend (Mint comes with GDebi for installing individual DEB files, so I usually use that). I find that checkinstall cleared up some of the misconceptions I had about building software from source, as I always wanted to package compiled software into a .DEB file but had no idea how to. I’m sure there are other, more specialised ways to build DEB and RPM files, especially for build automation servers, but for my intended use on my system and my system alone, checkinstall works just fine, and it should work just fine for you too.
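If you’d rather script the checkinstall sequence than type it out each time, here’s a minimal sketch in Python. The build_deb helper, its dry_run flag and the some-project-1.0 directory are hypothetical. The commands themselves are the same ./configure, make and sudo checkinstall -D --install=no steps, with make’s -j flag added. By default it does a dry run that only prints the commands.

```python
import shlex
import subprocess

# A minimal sketch of the configure -> make -> checkinstall sequence, wrapped
# in a hypothetical helper. With dry_run=True (the default) it only prints
# the commands; with dry_run=False it runs them and stops at the first failure.

def package_steps(jobs: int = 4) -> list[list[str]]:
    return [
        ["./configure"],
        ["make", f"-j{jobs}"],
        # -D builds a Debian package; --install=no skips installing it
        ["sudo", "checkinstall", "-D", "--install=no"],
    ]


def build_deb(src_dir: str, jobs: int = 4, dry_run: bool = True) -> list[str]:
    """Print (or run) each packaging step inside src_dir; return the command lines."""
    command_lines = []
    for cmd in package_steps(jobs):
        line = shlex.join(cmd)
        command_lines.append(line)
        if dry_run:
            print(f"[dry run] in {src_dir}: {line}")
        else:
            # check=True raises CalledProcessError if a step fails
            subprocess.run(cmd, cwd=src_dir, check=True)
    return command_lines


if __name__ == "__main__":
    build_deb("some-project-1.0")  # hypothetical source directory
```

Once the dry run looks right, pass dry_run=False. checkinstall may still ask you questions interactively about the package it’s creating, so run it from a terminal.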

Matter of fact, if you’re a beginner to building software from source and want to learn how Make works, one package I’d recommend building from source is Lua. Yes, Lua the programming language. Its source code is incredibly small, and building it with make is quick and easy. There’s no ./configure step here, and running make clean all local will start afresh (clean), generate the compiled files (all) and then install them into a local directory called install inside the source code directory (local), so you can browse through what happens when you run (sudo) make install. With a basic knowledge of how a Unix-like system such as Linux is laid out, you can visualise where in your system the files from the install folder will be installed to when you run make install: Lua’s Makefile installs under /usr/local by default, so, for example, whatever’s in install/bin will go to /usr/local/bin. You can make the build even quicker by adding a -j flag followed by a number (e.g. -j 8), so Make runs up to that many build jobs in parallel (I may cover concurrency and/or parallel builds in a separate article). Once you build and install Lua, you should be able to use it straight away, and uninstalling Lua this way is as simple as (sudo) make uninstall. This should give you a very basic overview of building a C-based software package with little to no external dependencies. No wonder it’s one of the more popular languages to embed in games for modding and other scripting purposes!
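Here’s a tiny Python sketch that spells out the mapping between Lua’s install folder and the rest of your system. It rests on one assumption about Lua’s Makefile: make install puts files under INSTALL_TOP, which defaults to /usr/local, and make local simply points INSTALL_TOP at the install folder inside the source tree instead. The real_destination helper is a made-up name.

```python
from pathlib import PurePosixPath

# Sketch under one assumption: Lua's Makefile installs under INSTALL_TOP,
# which defaults to /usr/local, while `make local` points INSTALL_TOP at an
# install/ folder in the source tree instead. The helper name is made up.

DEFAULT_INSTALL_TOP = PurePosixPath("/usr/local")


def real_destination(staged: str, install_top: PurePosixPath = DEFAULT_INSTALL_TOP) -> PurePosixPath:
    """Map a file under install/ to where `make install` would put it."""
    parts = PurePosixPath(staged).parts
    if not parts or parts[0] != "install":
        raise ValueError(f"{staged!r} is not inside the install/ folder")
    # Swap the leading "install" component for the real prefix
    return install_top.joinpath(*parts[1:])


if __name__ == "__main__":
    for staged in ["install/bin/lua", "install/bin/luac", "install/include/lua.h"]:
        print(staged, "->", real_destination(staged))
```

So install/bin/lua ends up as /usr/local/bin/lua. If you ever pass a different INSTALL_TOP to make install, give it as the second argument instead.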

While still a Windows user, I tried building an executable out of my A-level coursework written in Python using some libraries from PyPI, but in all my attempts, the executable ended up being too large for me to send over WhatsApp at the time, so I abandoned this endeavour, and as far as I know, any executables I have built for my A-level coursework are now lost to history. Other than that, truth be told, I never did build software from source when I was a Windows user. Back then, I always found the process of building software from source intimidating. Besides, there was rarely ever a need to do so, since the software I needed would be provided as an installer or as a portable package, whether that’s a single executable or an extractable zip folder. I downloaded Cygwin and MinGW-w64 back while I was still a Windows user, but I never really learnt how to use either of them properly, and by the time I switched to Linux it was already too little too late. I actually did try building MonoDevelop once with Visual Studio, and I ran into a problem that resulted in the whole build failing, so there’s that.

Other than that, most of my experience with building software has been on Linux, and I’m glad to be getting more and more experienced, confident and comfortable with build systems. I’m definitely hoping to find uses for not just the GNU build system but also CMake (with or without Ninja), Meson, SCons and even QMake. Even if I don’t use some or even any of them in any serious projects, I suppose it would definitely be nice just to learn them for the sake of it, because why not? Either way, this definitely helps me understand the build processes for much of the software I come across a lot better than I used to. It also means I am more open to building some programs from source in more circumstances than before, and that’s always an improvement in my book! Mind you, having a laptop that’s capable of actually building some more complex programs from source helps considerably when getting more into building from source. I learnt that the hard way when I tried to compile Godot from source on my old red laptop, and every time I tried it, my whole computer would freeze at some point! Meanwhile, my uni laptop can build Godot from source without running into that same issue!

Steam, Wine and Proton

Last year’s post also had a brief mention about gaming with Steam on Linux, specifically with Proton to run games made for Windows. This time, I’d like to go a bit more in depth with this topic, but not too much, since this section could effectively be another article in and of itself. This section gives me a chance to cover what I didn’t get to cover last year due to lack of time.

See, Linux by itself is lovely, especially for servers and most enterprise software, but one of the main drawbacks of Linux’s deployment in the consumer desktop market, arguably the biggest one apart from a perceived complexity and steeper learning curve for beginners (we were all beginners once, you know), is the relative lack of mainstream software supported for it compared to Windows and macOS, such as the Office/Microsoft 365 suite and Adobe’s Creative Cloud, however controversial both may be among many people across different software proficiency and expertise levels. This isn’t necessarily the fault of any Linux users and/or Linux kernel maintainers. Far from it! It’s more to do with the fact that software publishers don’t port their most used Windows and/or macOS applications to Linux, despite ever increasing demand for those applications (and here I’m mainly taking aim at big ones such as Adobe, Apple and Microsoft; smaller software companies understandably may not have the means to do so).

Now, one could turn to virtual machines, using software such as VirtualBox or QEMU (many Linux users prefer the latter for its speed and efficiency; it’s also available on other OSes). Or one could turn to PC emulators. Or, more importantly, one could turn to Wine. Wine is not an emulator. It’s a compatibility layer. Unlike an emulator or VM, it runs on top of Linux to allow Windows software to be installed to a “prefix”, a specific folder whose subdirectories are structured similarly to those of a Windows system (one of the main folders is called drive_c, which stands in for the C: drive), while also granting that software, and the prefix hosting it, access to certain files and folders on the rest of your system. Emulators and VMs most likely won’t have that access out of the box and may have to be configured to get it. For example, the “My Documents” folder in a typical Wine prefix is a symbolic link to the “Documents” folder in your home directory, and the prefix itself usually lives at ~/.wine (where ~ is shorthand for the home directory, /home/insert-username-here).
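To picture what a prefix looks like from Linux’s side, here’s a simplified Python sketch that turns a Windows-style C: path into the matching path inside a prefix. It assumes the default ~/.wine prefix (with a made-up user name, alice) and ignores the parts of real Wine that make this more involved, such as the drive letter symlinks in the prefix’s dosdevices folder and case-insensitive file names. Wine ships its own winepath tool (winepath -u converts a Windows path to a Unix one) for doing this properly; to_unix is just a hypothetical illustration.

```python
from pathlib import PurePosixPath, PureWindowsPath

# Simplified sketch: inside a Wine prefix, drive C: is the drive_c folder.
# Real Wine resolves drive letters through symlinks in <prefix>/dosdevices
# and matches file names case-insensitively; both are ignored here. The
# default prefix below uses a made-up user name, "alice".

def to_unix(win_path: str, prefix: str = "/home/alice/.wine") -> PurePosixPath:
    p = PureWindowsPath(win_path)
    if p.drive.upper() != "C:":
        raise ValueError("this sketch only handles paths on drive C:")
    # p.parts[0] is the anchor ("C:\\"); the rest are folder and file names
    return PurePosixPath(prefix, "drive_c", *p.parts[1:])


if __name__ == "__main__":
    print(to_unix(r"C:\Program Files\OpenMPT\OpenMPT.exe"))
```

The OpenMPT path is only an illustrative example; the point is that everything a Windows program sees as C:\ is just an ordinary folder you can browse from your Linux file manager.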

I already use Wine to run the OpenMPT tracker music editor (and player, for most of my usage of it, anyway) and Bejeweled, Bejeweled 2 and Bejeweled Twist, all three of which I installed from a single CD-ROM called “Bejeweled Collection” that I got as a gift in 2013, at the grand old age of 11, and which video game publisher Mastertronic used to sell at the time, before they went out of business in 2015. I use the official WineHQ binaries they provide for Ubuntu using their own apt repositories.

Wine is awesome, especially for running (usually older) Windows programs on Linux. Most Linux users will have installed Wine at some point. Thing is, it’s not perfect, especially with newer Windows programs, and you can check the Wine Application Database (AppDB) to see if an application is listed on there and, if so, whether or not it works with Wine, and/or if you need to tweak anything to make it work. There are also things like Winetricks, Bottles and PlayOnLinux to help augment and/or boost Wine’s usability.

And then there’s Proton, a version of Wine that Valve Software works on and uses for its Steam frontend for Windows-only games and software (mostly games).

Steam on Linux has come a long way since Steam’s Linux client was first launched in 2013. In fact, gaming on Linux has definitely come a long way since then, and Valve’s investment in Linux has certainly played a monumentally huge part in the advancements that desktop Linux has made in the gaming and game development space. Part of this growth can be attributed to an increase in games that natively support Linux, as well as modern game engines on the market increasingly adding support for publishing games made with them on Linux systems, such as Unity, Unreal, Godot (of course) and countless others. The creation of the Linux distro SteamOS in 2013, as well as its massive overhaul in 2022, particularly changing its base from Debian to Arch Linux (btw), must have helped things as well, especially the new Arch-based SteamOS after its inclusion in the Steam Deck as its main OS. An arguably more important thing, however, is the eventual creation and inclusion of the aforementioned Proton (which does indeed run on SteamOS). The Steam launcher automatically enables support for Windows games that have officially been verified to work on Proton by Valve themselves, most likely through back-and-forths with the developers and/or publishers, but by opening the Steam launcher, clicking the Steam logo and/or wordmark at the top-left corner of the launcher window, then clicking “Settings”, then going to “Compatibility” on the newly opened settings window and finally clicking “Enable Steam Play for all other titles” to turn it on, you can theoretically run any Windows-only game using an installed version of Proton, and Steam should install a version of Proton for you once you enable this setting.

Proton does work with a surprisingly large number of Windows-only games working just fine without changing anything, but while this does mean you can try launching a Windows game through Steam on Linux using Proton, do bear in mind that it isn’t guaranteed to work with every single Windows-only game (at least not yet). Some Windows-only games may need to be tweaked somehow, while some other games may just not work at all. There’s a website you can check out called ProtonDB, to see if your game works with Proton and if you need to change anything first before you get it to run properly. There’s also at least one Steam Curator that shows some games that work well with Proton and/or what you need to do to get them working (if it’s possible to get them to work at all), and there are other smaller Steam curators that do the same thing.

In my personal experiences with Proton, I almost always managed to get my Windows-only games to work on Proton with no issues, or very few minor ones if there are any. The only game that doesn’t run on Proton in quite the same way it runs on Windows is Chip’s Challenge, but even then that’s just the music stopping sporadically. The game itself plays just fine. There’s also Zuma’s Revenge working with Proton 5 and not Proton 6 when I wrote about it two years ago (I’m not sure if that is the case now, but it certainly was the case back then), but even that’s fixed by just installing Proton 5 and going through some settings to make sure Zuma’s Revenge uses that specific version of Proton. Yes, you can have multiple versions of Proton installed at once via Steam, and games can be configured to use one version of Proton and not the other.

Overall, I’m pleased with how much we can do with Wine and Proton, and I’m amazed at how much both have achieved and also how much Linux gaming and desktop Linux in general have achieved because of both of them. Obviously, progress in certain places still needs to be made for both of them, but even then, both Wine and Proton are opening a lot of doors to so many potential Linux users who may be held back by the need to use certain software on Windows and/or macOS that isn’t readily supported on Linux. Someday apps supporting Linux alongside Windows and macOS will become the new norm in all of software development, as it already is for many (but not all) apps, but sadly we still have a long way to go before that happens. Until then, I shall continue to extol the virtues of Wine and Proton to Linux users just starting out, and long may the successes of Wine and Proton continue!

One more thing: there’s also a similar project in ongoing development called Darling, for running macOS programs on Linux (at least those that haven’t been fully ported to GNUstep), but it started way more recently than Wine did and is still very much a work in progress. Still, keep an eye out for it. At this time, its developers are definitely making decent progress, and I look forward to seeing how well it goes for them!

Music production on Linux: How it started and how it’s going (for me, anyway)

Lastly, I want to talk about music production, which I dabble in here and there. I haven’t released anything yet, and I don’t plan to anytime soon. For the moment, at least, I just dabble in music production mainly for myself as a hobby, at least when I’m not partaking in my other hobbies like, well, listening to music. Well, I got a 7 (A equivalent) in my Music GCSE, so I had to put it to use somehow, right?

For tracker music on Windows, I used OpenMPT. For tracker music on Linux, I use… OpenMPT. With Wine. As briefly described in the previous section. I definitely want to try out Schism Tracker and MilkyTracker and Buzztrax and Renoise and several other trackers on Linux at some point, but I’m used to OpenMPT, and it works well enough for me, so I guess if it ain’t broke, don’t fix it. Words to live by, eh?

Before I switched to Linux, my first experience with a Digital Audio Workstation was with GarageBand on the iMacs in my sixth form’s music department, and also on a MacBook owned by one of my uncles. I found GarageBand to be quite nice indeed, especially for something that’s free to use, but it doesn’t run on Windows, or even Linux, for that matter. When I first started making non-tracker-based tunes on Windows, therefore, I turned to Cakewalk by BandLab, a totally free DAW that’s basically a continuation of the paid Sonar DAW, originally created by the now defunct music software company Cakewalk, hence the new name once the DAW got picked up by BandLab Technologies. When I switched from Windows to Linux, I knew it could be used with Wine somehow. I just didn’t get around to it because (1) I was so busy with other things and (2) I eventually started experimenting with native Linux DAWs.

I’ve tried out two of them, specifically: Ardour and LMMS. I actually made a song with LMMS back when I was a Windows user, but I’ve definitely used it much more on Linux. There are also other DAWs out there that I’d love to try out someday, but I can’t give them honest reviews until I’ve actually tried them.

SIDENOTE: I’m neither counting Audacity nor Tenacity in this case, as both are audio editors and while they’re awesome for what they are (I use Tenacity nowadays, especially after Audacity ran into some trouble in 2021), they’re not full-fledged DAWs in their handling of MIDI and VSTs, as well as differing features between audio editors and DAWs in general. With that out of the way, it’s back to talking about Ardour and LMMS.

Admittedly, it’s been quite a while since I used Ardour. I installed the Ardour Flatpak on my old red laptop and then made one track with it as a personal exercise using some Virtual Studio Technology plugins (VSTs) I installed on my red laptop. The DAW actually locks onto your system’s audio output (and, I presume, its input too), even when the DAW itself is idly running in the background, and that is a feature, not a bug, so I had to deal with it, but it was otherwise really nice to work with. Coming over from Cakewalk, I was able to adapt to Ardour and its user interface very well, and I remember gradually picking up on where most of the things I needed were. I remember Ardour being quite light on my resources, and I certainly don’t remember any crashes while I was working on a song, which is always a plus. Of course, the more tracks and instruments there are, and the more of them being used simultaneously at certain points in a song, the more of any system’s resources will be used, but for what it is Ardour shouldn’t hurt my computer too much, if it will even hurt it at all. I also like that Ardour calculates your song’s dynamic range when it exports your track to an audio format (such as WAV), and gives you a Dynamic Range index when the export’s done. As someone who wants to make sure his music doesn’t fall into the trappings of the loudness war, unless it’s a stylistic choice, I find this quite helpful and I very much appreciate that it’s there. Support for Linux VSTs, as well as LADSPA and LV2 plugins, installed on my system is also a huge plus (I don’t have any plugins globally installed on my uni laptop yet), but they have to be installed first as Ardour doesn’t really come with its own preinstalled instrument plugins.

I enjoyed using Ardour a lot while I was using it, and I definitely want to work with it more, though, if I may confess, the lock on the system’s audio I/O and the need to install external instrument plugins do leave casual, mid-work usage somewhat off the table, at least for me. I don’t necessarily mind the lack of built-in instrument plugins in Ardour, as there are plenty of free ones I can install, and plenty of places to look for them too, even though I don’t have any installed on my uni laptop (yet). However, I do care quite a bit about a DAW not taking complete control of audio I/O. The main reason is that I sometimes like to listen to music while I’m using the DAW, whether for inspiration or to check a song, or parts of it, when I’m making a cover of it, to make sure I’m getting the notes and chords right. I find that can be really helpful, much more than you’d expect, and I don’t want to have to use a separate device to listen to a song while I’m using my DAW to make one. If there is a way to remove Ardour’s lock on the system audio I/O without breaking it, then someone please let me know, because I’d love to see that happen. Still, this doesn’t taint my overall high opinion of Ardour so far, and I definitely look forward to making more and more tunes with it. Well, provided I actually make myself make more music with Ardour!

As for LMMS, well, I definitely made myself make more music with that! Not only does LMMS not lock the system audio (and I detailed why I like that in the paragraph above), but it also comes with a bunch of instrument plugins out of the box, as well as several presets for those instruments and even some samples to use with its built-in AudioFileProcessor plugin. Quite often, for me at least, the urge to get my creative and musical juices flowing by making a song comes on fairly quickly, sometimes even spontaneously, so being able to act on it easily and casually, with minimal fuss (if any), is always appreciated, and, at least for instrumental electronic music, LMMS does just that, and does it perfectly. The many presets included with LMMS for most of its built-in instruments provide ready-made patches for almost any type of synth and/or sound I may be looking for in a song, even though I definitely want to make my own patches for some instrument plugins at some point (I used to make a few of my own patches for TAL Noisemaker, back when it had the old UI, which I found to be quite intuitive when learning synth patch creation). It’s also quite light on my system resources, like Ardour, and, in my experience at least, even lighter than Ardour. Its UI has its own CPU monitor, and it rarely, if ever, shows the CPU being fully used. Again, more involved and intense simultaneous use of several instruments, plugins and tracks at any point in a song will lead to LMMS using more of any system’s resources, but even then, I never feel my system’s resources being strained when LMMS, or even Ardour, is running, especially on my uni laptop.

LMMS does have its drawbacks, though. The main one is that it doesn’t support built-in audio recording yet. You can have audio tracks in an LMMS project and load audio recordings onto them, but you cannot record audio from an input using LMMS. This is a huge drawback for making certain types of music with LMMS that may contain live vocals and/or live instruments, as, well, you can’t record them with LMMS. The common workaround is to make as much of the backing track in LMMS as you can, export the song (either as the whole song or as the individual tracks for each instrument and/or beat/bassline), open Audacity/Tenacity, record your vocals and/or live instruments there, and then export the track again. Yeah, Ardour is definitely more convenient for working with recorded vocal and/or instrument tracks alongside MIDI tracks. Also, while I certainly appreciate LMMS’s built-in AudioFileProcessor plugin, and I’m glad it exists, I do find it limiting in certain edge cases. Don’t get me wrong, AudioFileProcessor can play back samples at a MIDI-controlled pitch perfectly fine, and it supports basic sample manipulation (you can reverse a sample, enable/disable normal and ping-pong loops, and continue its playback across notes), but for more specific and involved cases, such as playing the sample from the start and then looping between two specific points, which you’d want to do for pianos, for example, you’re better off installing a different sample playback plugin with those features and adding it to your LMMS project.

Still, for what it’s capable of, I find LMMS to be a good DAW for instrumental electronic music. I didn’t mention this earlier, but I do think it’s a lot simpler to use than Ardour, especially for beginners just getting into electronic music creation and production (I had some experience beforehand, so Ardour was intuitive enough for me, but you, yes, you, dear reader, may end up having a different experience from mine). Yes, LMMS has fewer features than Ardour, and that’s partly why it’s simpler to use, but it’s also simpler because of the way everything is laid out, which makes it far more intuitive to pick up: you can try out its basic features and learn them visually, aurally and quickly, before eventually getting stuck into making music with it at your own pace. The relative ease of making songs with LMMS compared to Ardour, thanks to its abundance of bundled instruments, effects and samples, along with the fact that it doesn’t lock onto your system’s audio input and output, means I can get stuck into making tunes with it pretty much instantly, even while I’m doing something else, such as browsing the web or listening to a song as a reference point for making a cover of it. I also find LMMS quite fun to use, again for all of the reasons I mentioned in my previous sentence. I know some may consider that a niche reason to like a DAW, but when I’m in my element with LMMS, I honestly really enjoy using it, whether for something serious or just to mess around with. I think it’s a perfectly valid reason to like something, not least a DAW. Don’t you?

As for what I would recommend for instrumental electronic music production, as of the time this post goes live, it should come as no surprise at all that I would wholeheartedly recommend LMMS. I mean, I’ve written more about it in this article than I have about Ardour, because I’ve had way more experience with LMMS and ended up having more to say about it as a result. Still, give Ardour a shot, especially for the types of music LMMS isn’t necessarily suited for, and for which Ardour will most certainly work better. You might like Ardour. I certainly do. I do wish I had more to say about it, and I also want to use it more often. It can certainly be used for instrumental electronic music production, but I hope you understand why I prefer LMMS for this purpose, at least for the moment. Maybe my opinions on Ardour and LMMS will change later.

There are other options out there for music production on Linux, both proprietary and open source, but I haven’t tried any of them yet. Other than Ardour and LMMS, there’s Qtractor, MusE, Rosegarden, Zrythm and Radium. For Zrythm, Radium and Ardour, note that, while all three are fully open source, their developers charge money for official binaries with all the features (I use the Ardour Flatpak, which builds Ardour from source and thus still has all of the features, but I recently started donating to Ardour to support its development when possible). Beyond all of those, there’s Traverso DAW, whose binary downloads are quite hard to find, but its Git repository shows that it is still being developed, so I’m personally keeping an eye on it. If you’re willing to use proprietary software, there’s also Bitwig Studio, REAPER and Tracktion Waveform, all of which offer free trial versions with certain limitations and/or restrictions on their usage. You could even try running certain Windows DAWs, such as Cakewalk, under Wine and/or Proton. I’ve heard Ableton Live works quite well under Wine!

I do plan to try out some of those other options at some point, as well as any other Linux DAWs I happen to find out about in the future, but if nothing else, I hope I have proved to you, in this final section of my anniversary post, that making music on Linux is certainly possible, and not as difficult as even I expected it to be. I’ll keep working with both LMMS and Ardour, I’m sure, and I may well try out some other options for Pro Audio and music production on Linux, not least the ones I listed in the previous paragraph. Rest assured, this is not the end of my experiments with music production on Linux. Maybe it could well be the start of yours.

Myself? I just wanted to see if making any sort of music on Linux was possible. I pondered that in my first anniversary post, published a year after I abandoned Windows and switched to Linux for life. Two years later and I have achieved just that. I have made progress and am very happy indeed!

And that’s it!

Phew!

This one took a looooooooooooooong time to write, and it’s my largest article yet, so I’m glad I started writing it several days in advance. I’m also glad that I still managed to publish it before the end of 29th July 2024 (for me, at least), again several minutes before midnight! I’m sure there’s still some material I haven’t covered here that I’ll likely regret leaving out later, but for a routine thing, I’m quite satisfied with what I put out this year. Who even knows whether the articles for subsequent years will be larger or smaller? Maybe smaller, as I wasn’t really planning on writing so much for this year’s anniversary post, but I ended up using it to cover what I didn’t get to cover last year, because the previous anniversary post was so last-minute.

I actually wanted to write another section for this article about trying out various tools and other things on Linux, but it probably wouldn’t integrate as well with all the other sections of this article, and I think it should be its own article in its own right, and maybe even its own site page outside of the blog as well. Who knows?

Until then, I hope you enjoyed reading this leviathan of a blog post, or, rather, this anthology of sub-articles, as much as I thoroughly enjoyed writing all of it. I definitely do not expect future blog posts to be anywhere near as long as this one, but who can really say? Maybe I’ll start writing and the balloon will grow as big as this one did! I was actually in the middle of writing at least one other blog post that I planned to publish before this one, but that one’s not finished yet, and I do want to finish and upload it at some point, so do watch this space.

I try not to look back too often on my history as a user of computers, which, yes, is as nerdy a thing to do as it sounds, but it was fun to see not just how my usage of Linux has changed since I moved, but also how much my use of computers in general has changed over the years. Not only am I contributing more to certain areas of open source, but I’m also using the terminal more for things like installing software and running certain commands, as well as getting more involved with building software from source and working with it in certain creative areas. I look forward to seeing how I (hopefully) mature and evolve as a Linux user next year. I still have a lot of things to try out for myself, and as for this blog, well, despite how much I wrote for this post, there’s still a lot for me to write about here, and I hope you’ll stick around.

So thank you so much for reading, and until next time, peace!